OpenAI’s Sora is finally here, with a Turbo version to boot

Sora’s launch should interest users not only for its features but also as a step toward AGI.

On the third day of OpenAI’s “shipmas,” the artificial intelligence powerhouse finally launched its Sora video generation model, along with Sora Turbo, a faster version of the model.

Initial reports suggested that the first-generation Sora needed about 10 seconds to generate one second of video. Sora Turbo improves on this considerably: during a live demonstration, it produced four 10-second videos simultaneously in 72 seconds. The updated model also supports text-to-video, image-to-video, and video-to-video generation at lower cost.
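As a rough back-of-the-envelope comparison, the two figures above can be turned into generation time per second of finished video. This assumes they are directly comparable, which is not established: one comes from early reports and the other from a live demo, and resolution, hardware, and server load are not stated for either.

```latex
\begin{aligned}
t_{\text{Sora}}  &= \frac{10\ \text{s of generation}}{1\ \text{s of video}} = 10 \\
t_{\text{Turbo}} &= \frac{72\ \text{s of generation}}{4 \times 10\ \text{s of video}} = \frac{72}{40} = 1.8 \\
\frac{t_{\text{Sora}}}{t_{\text{Turbo}}} &= \frac{10}{1.8} \approx 5.6
\end{aligned}
```

On those assumptions, Sora Turbo needs roughly 1.8 seconds of generation time per second of video, about 5.6 times faster than the reported first-generation figure.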

ChatGPT Plus and Pro subscribers can access Sora Turbo at no additional cost, though usage limits and video quality vary by tier:

- ChatGPT Plus subscribers, who pay USD 20 per month, receive credits to generate up to 50 videos per month, at resolutions up to 720p and a maximum duration of 5 seconds per video.

- ChatGPT Pro subscribers, who pay USD 200 per month, get unlimited video generation at standard speed, priority credits for up to 500 videos per month, and the option to create videos up to 1080p and 20 seconds long. They can also download videos without watermarks and run up to five generations concurrently.

Currently, Sora is available in 155 countries and regions but excludes mainland China and most of Europe. Following its release, a surge in demand temporarily overwhelmed OpenAI’s servers, prompting CEO Sam Altman to suspend new user registrations and slow video generation speeds to stabilize the system.

Sora: A tool for creativity and iteration

The OpenAI team positions Sora as a creative tool for generating videos from text descriptions, images, or video clips. However, they stress that Sora is not designed to produce a full-length movie from a single command. Instead, it is built for iteration, letting users refine their outputs step by step.

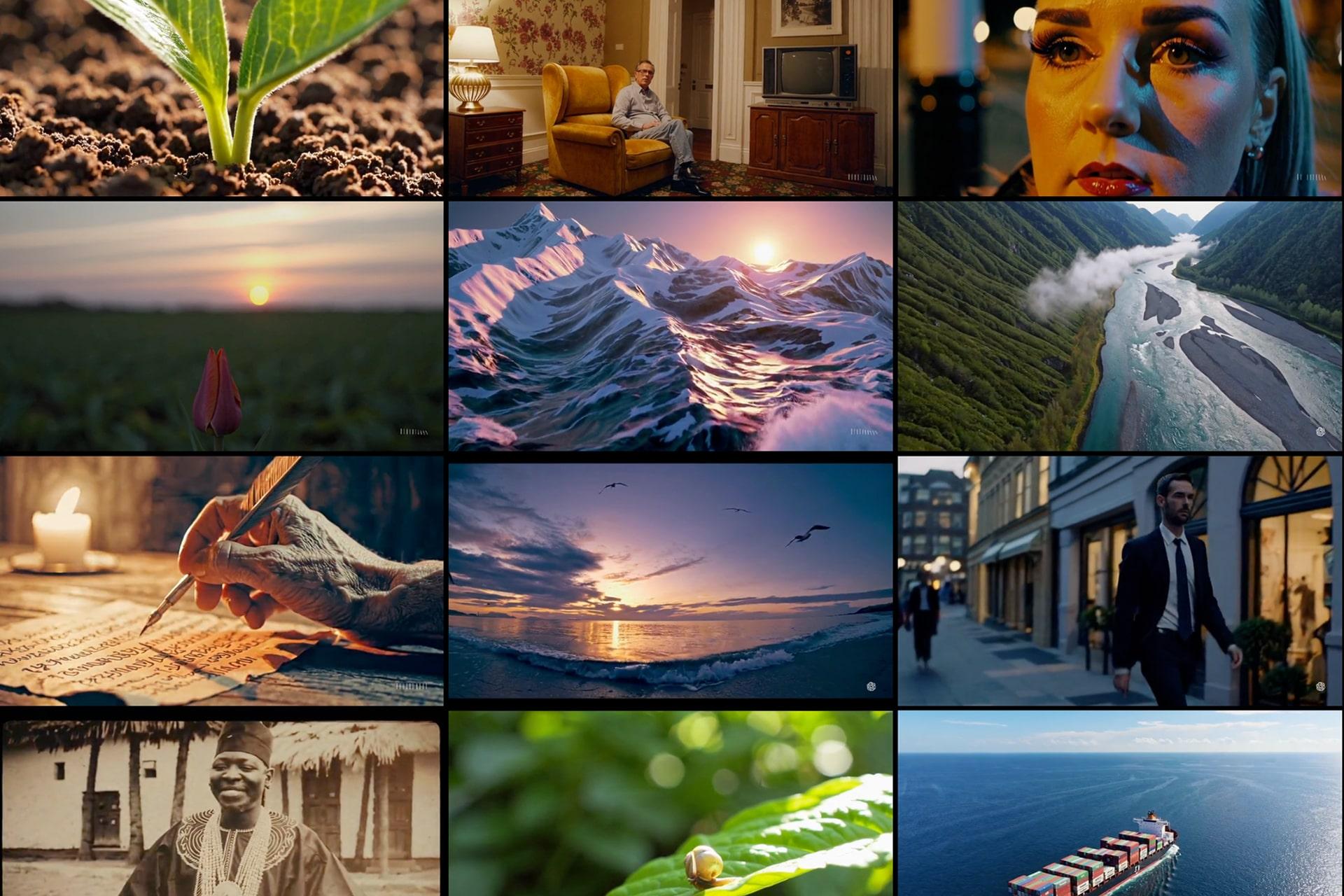

To introduce Sora’s capabilities, OpenAI turned its launch event into a live tutorial. Users begin the video creation process in the storyboard feature, which displays four videos showing different details from different perspectives.

Within the storyboard, users enter their instructions, specify the style, aspect ratio, duration, number of scenes, and resolution, and then generate the video. This gives users fine-grained control over each parameter of the output.

Sora currently supports videos up to 20 seconds long at resolutions up to 1080p, although these maximums are available only on the Pro tier. Aspect ratio options include 16:9, 1:1, and 9:16, covering widescreen, square, and vertical formats.

According to OpenAI, concise prompts leave Sora more room to fill in details on its own, while longer, more specific prompts yield videos that follow the input more closely. During a live demonstration, Sora was prompted to create a video featuring “a yellow-tailed white crane standing in a stream” for the first segment, followed by “the crane dips its head into the water and picks up a fish” for the next. Although the two segments were not continuous, Sora generated transitional footage that joined them seamlessly.

The resulting video followed the prompts, with smooth transitions and consistent visuals. However, although the crane’s dive for the fish was rendered with splashing water, the fish itself was never clearly visible.

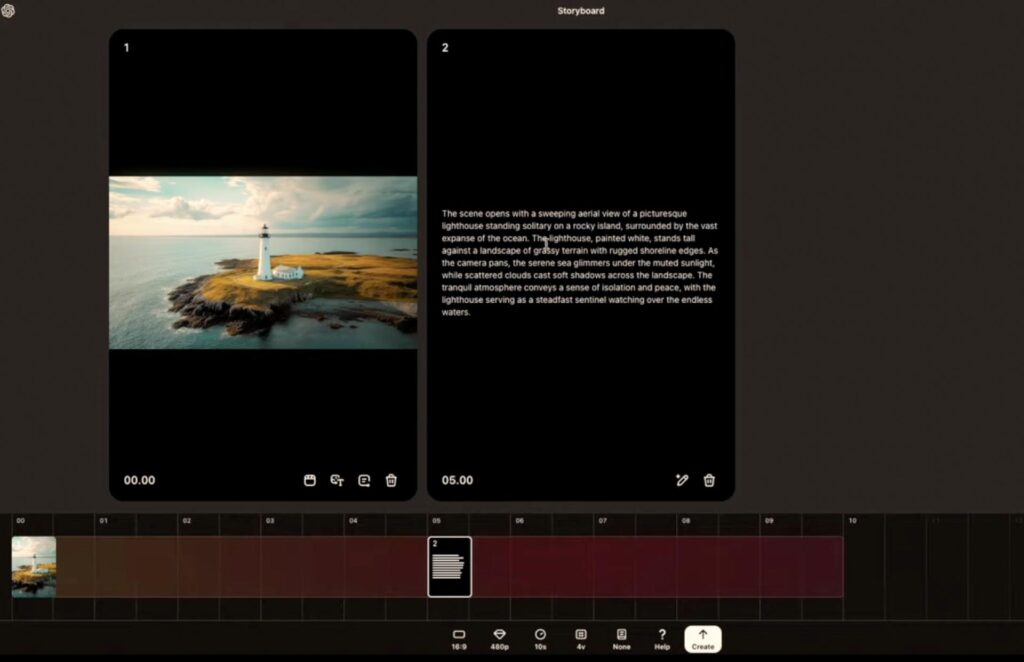

Users can also upload an image or video as a starting point, and Sora will generate a description for the next segment. Users can then edit that description or adjust the timeline to control when the new segment appears in the video.

For instance, after a user uploads an image of a lighthouse, Sora generates a descriptive card outlining the next video segment. Users can modify the generated instructions and reposition the segment on the timeline, giving them greater control over the flow and sequencing of their video. In this way, the feature combines user-supplied material with system-generated content.

Once the initial video is generated, users can refine it further with the remix tool, which supports modifications such as replacing a mammoth with a robot or changing a character’s expression. Sora offers three strength settings (subtle, light, and strong) that control how far the remixed result departs from the original.

If users are satisfied with specific parts of a generated video, they can use the recut tool to extract those segments and extend them with new instructions, effectively creating new videos. Sora also includes a loop feature that produces seamlessly repeating videos and a blend feature that merges distinct scenes into one.

More than a tool: A pathway to AGI

In February 2024, OpenAI introduced the first-generation Sora, which could generate high-definition videos up to one minute long from user prompts. After ten months of closed beta testing with select visual artists, designers, and filmmakers, Sora has now officially launched. Hours before the live event, OpenAI released a demo video of Sora Turbo.

During Sora’s beta phase, Chinese competitors such as Kuaishou’s Kling AI, ByteDance’s Dreamina (also known as Jimeng AI), and MiniMax’s Hailuo AI gained international recognition. According to analytics platform Similarweb, Kling AI received 9.4 million global visits in November, surpassing Runway’s 7.1 million. Following the release of the Sora Turbo demo, many users commented on the parallels between its outputs and those of Chinese counterparts.

OpenAI CEO Sam Altman acknowledged that Sora had taken longer to release than expected, citing the need to ensure the model’s safety and to scale up computing resources. To ease the compute constraint, OpenAI has reportedly partnered with semiconductor firm Broadcom to develop AI chips, which could launch as early as 2026.

OpenAI views Sora as a significant milestone toward artificial general intelligence (AGI). During the launch event, Altman emphasized the importance of enabling AI to understand and generate video, describing it as a transformative step for human-computer interaction and a critical component of AGI development.

Reactions to this vision have been mixed. Jiang Daxin, co-founder and CEO of Stepfun, praised OpenAI for advancing multimodal generation, describing it as consistent with similar research paths toward AGI. Conversely, Meta’s chief AI scientist Yann LeCun criticized the approach, arguing that simulating the world through pixel generation is resource-intensive and ultimately impractical.

Altman has indicated that the first applications of AGI could appear as early as 2025, enabling AI to tackle complex tasks across diverse tools. While he expects the initial impact of AGI to be modest, he anticipates its influence will eventually exceed public expectations, driving profound transformations with each technological leap.

As Sora’s adoption grows, its contributions to OpenAI’s AGI ambitions are likely to expand, positioning the company closer to its ultimate goal of redefining human-computer interaction through advanced AI capabilities.

